In my work, I get deeply involved in both computer languages (like Java) and natural languages (like English). Programmers spend a lot of time thinking about and debating the relative merits and shortcomings of different computer languages. Often these debates take on the aspect of a religious conflict, but they are not entirely worthless, because programming languages can change over time, as language designers add new features and remove inconsistencies. The work of deciding on and incorporating the improvements is a difficult process, not just because of the technical difficulty, but because the changes require buy-in and approval from the language community. To propose a new feature, a language designer writes a detailed document describing a problem and proposing a solution. These documents have names like “Java Specification Request” (JSR) and “Python Enhancement Proposal” (PEP). After the proposal has been subjected to sufficient study and scrutiny, a standards organization takes a vote to decide whether or not to adopt it.

In my research I often come up against some particularly annoying feature of English, and think to myself, “hmmm, the language would really be so much better if we fixed this inconsistency”. Unfortunately, there is no mechanism for proposing and adopting improvements to English that is analogous to the JSR or PEP. While English does change, this change happens in a haphazard and unplanned way. The gradual drift in the way the language is used means that, over time, it becomes more and more cluttered and inconsistent. This disorganization causes the language to become harder to learn, harder to understand, and less useful for the purposes of communication.

Gandhi advises us to “be the change you want to see in the world” [1]. In that spirit, I submit this Plan to Fix English (P2FE) for consideration and study by English speakers everywhere. I believe this proposal, if widely adopted, will make the language more consistent and precise. Even if you do not accept this particular improvement, I hope you endorse the general principle that we can and should work together to make English better. And if you don’t care about fixing English, you might at least enjoy the discussion of grammar theory, which begins with a description of the case phenomenon in relation to pronouns.

Pronouns and the Case Phenomenon

Many languages exhibit the linguistic pattern known as case. Case is a grammatical phenomenon where a noun must be inflected in a certain way depending on its relationship to a verb. Grammarians commonly distinguish between the nominative case, which is used for the subject of the verb, the accusative case, used for the object, and the reflexive case, used when the same entity is both the subject and object.

Some languages exhibit case phenomena quite strongly, requiring every noun to be inflected as appropriate. English exhibits only weak, vestigial case rules, which apply only to pronouns. The rules are illustrated by the following sentences, where (***) marks an ungrammatical sentence or reading:

1. He bought a new car.

2. Him bought a new car. (***)

3. She told him about the murder.

4. She told he about the murder. (***)

5. The lawyer talked to us for three hours.

6. The lawyer talked to we for three hours. (***)

7. John talked himself (John) into buying a new car.

8. John talked him (John) into buying a new car. (***)

9. John talked him (Mike) into buying a new car.

10. John talked himself (Mike) into buying a new car. (***)

As you can see, even though the pronouns ‘he’, ‘him’ and ‘himself’ are semantically identical, we need to inflect them properly in order to satisfy grammatical constraints.

The grammatical rule of case can be expressed in terms of the role that the pronoun is fulfilling in the sentence. For the time being, let’s consider a simplified set of three roles: subject, object, and prepositional complement. Then the rules are as follows:

1. If the pronoun fulfills the subject role, it must be in the nominative case.

2. If the pronoun acts as the object or prepositional complement, it must be in the accusative case.

3. Exception: if the pronoun in rule 2 refers to the subject of the sentence, it must be in the reflexive case.

This set of rules explains the pattern of grammaticality shown in the above sentences. Sentence #2 is ungrammatical because it uses an accusative case pronoun in the subject role, and sentence #4 is ungrammatical because it uses a nominative case pronoun in the object role. Sentence #6 is ungrammatical because it uses a nominative case pronoun as a prepositional complement.

(I won’t discuss reflexive pronouns in this post, but I’ve included some sentences that illustrate their usage pattern. Using a reflexive pronoun instead of an accusative one never makes a sentence ungrammatical. Instead, it precludes certain interpretations about which noun the pronoun refers to. For example, sentence #8 is marked incorrect because the pronoun ‘him’ cannot refer to ‘John’; it must refer to some other person.)
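The nominative and accusative rules above are simple enough to sketch in a few lines of Python. This is only an illustration, not the Ozora implementation: the pronoun table, the role names, and the function names are all assumptions made up for the example. Following the note above, a reflexive pronoun is accepted wherever an accusative one would be, since it restricts interpretation rather than grammaticality.

```python
# Toy case checker for the simplified rules above.
# The pronoun table and role names are illustrative assumptions.
NOMINATIVE = {"i", "he", "she", "we", "they"}
ACCUSATIVE = {"me", "him", "her", "us", "them"}
REFLEXIVE = {"myself", "himself", "herself", "ourselves", "themselves"}


def case_of(pronoun):
    """Return the case label of a pronoun, or None if it is not in the table."""
    word = pronoun.lower()
    if word in NOMINATIVE:
        return "nominative"
    if word in ACCUSATIVE:
        return "accusative"
    if word in REFLEXIVE:
        return "reflexive"
    return None


def case_allowed(pronoun, role):
    """Check a pronoun against the role it fills.

    role is one of "subject", "object", "prep_complement".
    Words outside the table (ordinary nouns) are always allowed.
    """
    case = case_of(pronoun)
    if case is None:
        return True
    if role == "subject":
        return case == "nominative"
    # Object and prepositional complement: accusative or reflexive.
    return case in ("accusative", "reflexive")
```

With this table, `case_allowed("He", "subject")` is true, while `case_allowed("Him", "subject")`, `case_allowed("he", "object")`, and `case_allowed("we", "prep_complement")` are all false, matching the judgments on the first six sentences.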

The Ozora parser can handle these simple sentences quite easily, because the rules described above are easy to encode in computational terms. As you can see below, it parses the grammatical sentences well, but refuses to accept the ungrammatical sentences, producing instead a red gen_srole link that indicates that it found no acceptable parse.

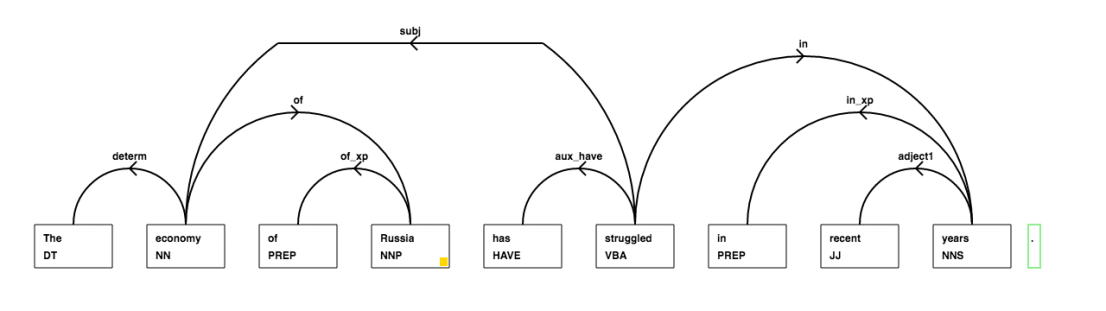

The fact that this knowledge is built into the system helps with parsing more complex sentences, by allowing the system to prune away bad candidate parses early. This refusal to parse ungrammatical sentences is a distinctive feature of the Ozora parser. Other parsing systems will happily produce output for the bad sentences; here’s the output from the Microsoft Linguistic Services API:

The Head/Tail Question

Many grammar theories, including the one used in the Ozora parser, are described in terms of merge operations. A merge operation takes a head phrase and a tail phrase and joins them together. The resulting merged structure inherits the properties of the head phrase:

- The headword of the merged structure is the headword of the head phrase

- The syntactic type (NP/VP/etc) of the merged structure is the syntactic type of the head phrase

Because of this asymmetry in the inheritance rules between the head phrase and the tail phrase, it is very important, when writing down a grammar rule, to know which substructure is the head. Fortunately, often it is quite obvious which is which, as in the following examples:

- unusually large car

- swimming in the river

- My mother’s necklace

In the first example, “unusually large” is the tail phrase, while ‘car’ is the head phrase, so the result is an NP with the headword ‘car’. Next, the head phrase ‘swimming’ joins with the tail phrase “in the river”, and the result is a VP, with the headword ‘swimming’. Finally, the head phrase ‘necklace’ joins with the tail phrase “my mother’s”, so that the resulting phrase is an NP with the headword ‘necklace’.
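The inheritance rule can be written down as a small data structure. The sketch below is a hypothetical illustration, not the Ozora data model: the `Phrase` class, the `merge` function, and the `AdjP` and `PP` labels given to the tail phrases are assumptions, as are the tail phrases' own headwords. The point is only that the merged phrase takes its headword and syntactic type from the head, whichever side the head sits on.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Phrase:
    text: str       # surface words of the phrase
    headword: str   # the word that summarizes the phrase
    syn_type: str   # syntactic type, e.g. "NP" or "VP"


def merge(head, tail, head_first):
    """Join a head phrase and a tail phrase.

    The result inherits headword and syntactic type from the head;
    the tail contributes only its words.
    """
    words = (head.text, tail.text) if head_first else (tail.text, head.text)
    return Phrase(" ".join(words), head.headword, head.syn_type)


# "unusually large car": the tail precedes the head.
car = Phrase("car", "car", "NP")
large = Phrase("unusually large", "large", "AdjP")  # tail label is assumed
big_car = merge(car, large, head_first=False)

# "swimming in the river": the head precedes the tail.
swimming = Phrase("swimming", "swimming", "VP")
in_river = Phrase("in the river", "river", "PP")  # tail label is assumed
swim = merge(swimming, in_river, head_first=True)
```

Here `big_car` is an NP headed by ‘car’ and `swim` is a VP headed by ‘swimming’; nothing about the tail's own headword or type survives the merge.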

The basic phrases listed above are easy, but when one is dealing with more complex grammatical patterns, the question becomes more subtle. Over time, I’ve built up a fair bit of experience and intuition related to this problem. One important rule of thumb is that the headword and the syntactic type should act as a linguistic summary of the entire phrase, and this summary should provide most of the information necessary to determine whether the phrase can fit into other places. To understand this idea, consider the following sentences:

- John bought a car.

- John bought the shiny new red car that had been parked in the dealer’s lot for five weeks.

In both sentences, the object of the word ‘bought’ is a noun phrase headed by the word ‘car’. That’s mostly all you need to know to determine that the phrase can fit into the object role. Even though the object phrase in the second sentence is quite long, the whole thing can be summarized as an NP headed by ‘car’. With just this summary, we can correctly deduce that the long phrase is a (semantically and syntactically) valid object of the verb ‘bought’. In contrast, if our headword-identification logic were broken so that we guessed that the headword was the verb ‘parked’, then we would incorrectly conclude that the phrase couldn’t fit into the slot it actually occupies. Similarly, if the headword were ‘dealer’, the phrase would be syntactically acceptable, but very odd semantically, because ‘dealer’ is an unlikely choice for the object of ‘bought’.

In addition to the logical arguments in favor of a grammar formalism based on the head/tail distinction, there is also a strong statistical rationale. Consider the following sentence:

- The boys will eat pizza and hamburgers.

Here’s the parse that the Ozora parser builds from the sentence:

The Ozora parser works by finding a parse description that maximizes the probability of a sentence. The language model is built from a large number of modular submodels. The most important submodel is the one used to assign probabilities to particular tailwords, given the headword and the semantic role. This type of model is both statistically powerful and intuitively natural. Given the headword and role as context information, humans can make strong intuitive judgments about what tailwords are plausible. In the example sentence, we see that the word “boys” appears as the tailword of “eat” with the subject role. From a semantic point of view, that is perfectly reasonable. Similarly, ‘pizza’ is a highly plausible tailword for “eat” in the object role. On the other hand, unless the text in question is some kind of bizarre fast food horror novel, “pizza” is wildly implausible as the tailword for “eat” in the subject role.

These strong intuitive judgments generally agree with the actual statistics of the text data: you will probably need to search through millions of books or newspaper articles before finding a sentence where “pizza” is the subject of “eat”, but it will be easy to find one where it is the object. Because of its statistical power, this strategy of language modeling helps us to improve both the overall performance of the model and also the accuracy of the parser.
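To make the idea of a tailword submodel concrete, here is a minimal count-based sketch of a distribution over tailwords conditioned on headword and role. It should not be read as the Ozora model, whose internals are not described here. The observations are made up for illustration, and the add-alpha smoothing, the `vocab_size` value, and the function names are all assumptions.

```python
from collections import Counter, defaultdict

# Made-up (headword, role, tailword) observations, for illustration only.
OBSERVATIONS = [
    ("eat", "subject", "boys"),
    ("eat", "subject", "children"),
    ("eat", "subject", "boys"),
    ("eat", "object", "pizza"),
    ("eat", "object", "pizza"),
    ("eat", "object", "hamburgers"),
]


def train(observations):
    """Count tailwords separately for each (headword, role) context."""
    counts = defaultdict(Counter)
    for head, role, tail in observations:
        counts[(head, role)][tail] += 1
    return counts


def tail_probability(counts, head, role, tail, vocab_size=10000, alpha=0.1):
    """Estimate P(tail | head, role) with add-alpha smoothing.

    Smoothing keeps unseen tailwords at a small nonzero probability.
    """
    context = counts[(head, role)]
    total = sum(context.values())
    return (context[tail] + alpha) / (total + alpha * vocab_size)


counts = train(OBSERVATIONS)
p_object = tail_probability(counts, "eat", "object", "pizza")
p_subject = tail_probability(counts, "eat", "subject", "pizza")
```

Even on this tiny toy data, `p_object` comes out well above `p_subject`, which is the asymmetry the paragraph above describes: the same word can be a strong candidate in one role and a very weak one in another.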

Partial Determiners

The third topic of this post relates to partial determiners, which are words such as all, most, several, and none. They are a common and important feature of English, allowing speakers to refer to groups with a greater or lesser degree of participation. The typical pattern is to complete the partially determined phrase by connecting the determiner to a plural noun or uncountable noun with the word of:

- All of the pizza

- Most of the boys

- Some of the water

- None of his friends

- A few of the Italians

This pattern seems intuitively easy to understand, so we might expect that it will be easy to describe using a grammar formalism. But when you actually try to do this, a thorny question arises: which phrase is the head and which is the tail? For the phrase “all the pizza”, is the headword “pizza”, or is it “all”? To answer this, let’s look at a few sentences that use partial determiners:

- The boys ate all the pizza.

- The mayor spoke to some of the reporters.

- None of the criminals escaped from jail.

The arguments about semantic and statistical plausibility given above suggest that in these constructions the main noun should be the headword. ‘Pizza’ is a highly plausible target for the object of ‘eat’; ‘reporters’ is a great target for the to-complement of ‘speak’, and so on. In contrast, the words ‘all’, ‘some’, and ‘none’ have a vanilla character; they’re not especially implausible in those contexts, but they’re not especially strong choices either.

In addition to this statistical argument, there’s another reason to choose the core noun as the headword instead of the partial determiner. In the small community of grammar formalism developers, there is a rule of thumb that says “Function words should not be headwords”. This rule is based on practicality concerns. Imagine you are a journalist with data science training, and you want to find articles where a particular individual (say, John Kerry) talked to reporters [2]. This can be expressed as a relatively simple query: find phrases where the main verb is ‘talk’, the subject is ‘John Kerry’, and the verb connects to ‘reporter’ with a to role. Then consider this sentence:

- Secretary of State John Kerry talked to some of the reporters about the Middle East crisis.

If the core noun acts as the headword, then our simple query will return the above sentence. But if the partial determiner is the headword, we’ll need to make the query significantly more complex to retrieve the sentence.
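The difference in query complexity is easy to see in a small sketch. The code below is hypothetical and is not the query language of the Ozora demo: a parse is represented as a set of (head, role, tail) links over lemmas, and the role names `quantifier` and `of` are assumptions chosen for the example.

```python
def matches(links, verb, subject, to_target):
    """True if the parse has verb --subject--> subject and verb --to--> to_target."""
    return (verb, "subject", subject) in links and (verb, "to", to_target) in links


# Two hypothetical analyses of the same sentence, as (head, role, tail) links.
noun_headed = {
    ("talk", "subject", "John Kerry"),
    ("talk", "to", "reporter"),
    ("reporter", "quantifier", "some"),
}
determiner_headed = {
    ("talk", "subject", "John Kerry"),
    ("talk", "to", "some"),
    ("some", "of", "reporter"),
}


def matches_through_determiner(links, verb, subject, to_target):
    """Extended query that also looks one step past a determiner head."""
    if matches(links, verb, subject, to_target):
        return True
    if (verb, "subject", subject) not in links:
        return False
    to_heads = [tail for head, role, tail in links if head == verb and role == "to"]
    return any((h, "of", to_target) in links for h in to_heads)
```

The simple `matches` query finds the sentence under the noun-headed analysis but misses it under the determiner-headed one; recovering it there takes the extra hop in `matches_through_determiner`, and every query that might cross a partial determiner would need the same treatment.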

The Plan to Fix English

To understand my proposal, spend a moment studying the following sentences:

- None of us want to go to school tomorrow.

- None of we want to go to school tomorrow. (***)

- Some of them thought that the president should resign.

- Some of they thought that the president should resign. (***)

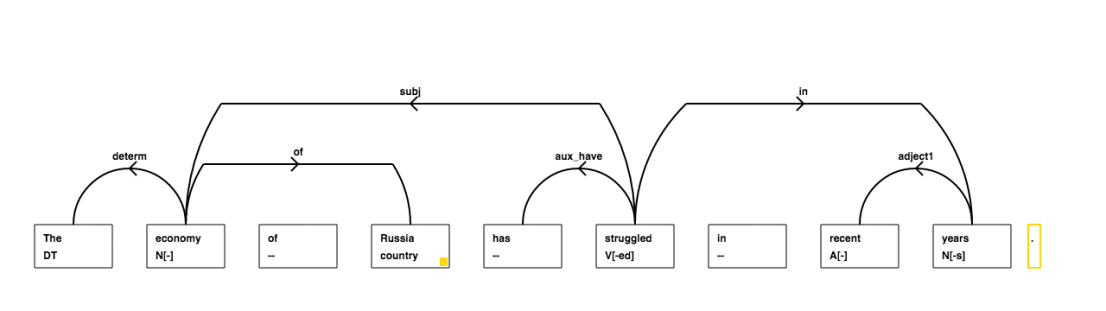

Let’s see how the Ozora parser responds to the first two sentences:

As you can see, the parser gets it exactly reversed. It happily generates a parse for the ungrammatical sentence, while refusing to accept the grammatical sentence (the gen_srole link indicates a parse failure).

Why does it fail on such a simple pair of sentences? The reason relates to the issues I mentioned above. The Ozora grammar system handles partial determiner phrases by treating the main noun (‘us’ or ‘we’) as the headword, and the quantifier (‘none’) as the tailword. That means the system considers the headword of the phrase “none of us” to be ‘us’, not ‘none’. Furthermore, the system knows about the rules of case, so it refuses to allow ‘us’ to fulfill the subject role.
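The reversal can be reproduced with a toy model of the two choices. This is a sketch under stated assumptions, not the parser's code: the function name and the `head` parameter are invented, and the determiner-headed branch assumes that the pronoun would then count as the prepositional complement of ‘of’, so that the accusative rule applies to it.

```python
NOMINATIVE = {"we", "they"}
ACCUSATIVE = {"us", "them"}


def subject_phrase_accepted(determiner, pronoun, head="noun"):
    """Judge '<determiner> of <pronoun>' used as the subject of a sentence.

    head="noun": the pronoun is the headword, so the subject-role rule
    is applied to the pronoun itself (the analysis described above).
    head="determiner": the pronoun is treated as the complement of 'of',
    so it must be accusative (an assumed alternative analysis).
    """
    if head == "noun":
        return pronoun in NOMINATIVE
    return pronoun in ACCUSATIVE
```

With the noun as head, `subject_phrase_accepted("none", "us")` is false and `subject_phrase_accepted("none", "we")` is true, which is the parser's reversed verdict. Passing `head="determiner"` flips both answers and agrees with ordinary intuition, at the cost of giving up the noun-headed analysis argued for earlier.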

This brings us to the substance of this Plan to Fix English. The proposal is simply to reverse our intuitive judgments and accept the parser’s response to the above sentences as grammatically correct.

This may seem like a ridiculous argument. I’m effectively saying, “you all should change the way you speak to make my life easier.” In fact, that’s exactly what I’m saying, but it’s not as unreasonable as it sounds at first. The Ozora parser is built on a set of logical rules about grammar. In a large majority of cases, the parser agrees with our intuitive grammatical judgments. In many other cases, the parser gets the wrong answer, because of an implementation bug or a legitimate shortcoming of its grammar system. In those instances, I’ll frankly agree that the responsibility to fix the problem is entirely mine. But in this particular case, the parser is faithfully following the logical rules that I described above. In other words, it disagrees with our intuition because our intuition is logically inconsistent.

I could, of course, write some additional code to implement a special case exception that would allow the parser to agree with our intuition. But I don’t want to do that, because the extra code would be unavoidably ugly, since it is forced to reflect ugliness in the underlying material. That brings me back to the appeal I made at the beginning of the post. English is a priceless shared resource which we bequeath to our children and which upholds our civilization and culture. But as the language drifts, it is gradually becoming more and more disorganized. The situation doesn’t seem terrible today, but in time the language may become so muddy and illogical that we lose the ability to discuss sophisticated concepts or to compose beautiful works of literature. We can’t stop linguistic drift, but we might at least be able to guide the movement in the direction of greater elegance and precision, instead of towards confusion and sloppiness.

I hope you found this P2FE to be somewhat thought-provoking, even if you don’t agree with the specific conclusions. Stay tuned for the next installment!

[1] – This may be a false attribution, but it sounds like something Gandhi might say, and anyway it is good advice.

[2] – You can actually try this query on Ozora’s online demo system. As of October 2017, the top result is the following sentence from this article:

Kerry was talking to reporters after meetings in Beijing with top Chinese officials, including President Xi Jinping.